Educators rely on student achievement test performance to provide important information for a variety of inferences they wish to make about education. Educators use test scores to gather information about what individual students know and can do, which helps identify students who need special educational assistance. Additionally, in the aggregate, student test performance has been a key part of school accountability during the No Child Left Behind era, and it has become an increasingly important component of the evaluation of teacher and principal effectiveness.

Most educators tend to think about and interpret test scores without considering the full breadth of what makes scores valid. It seems simple: give a student a well-made test, let the student try to do his best, and then interpret the resulting score as an indicator of what that student knows and can do. Obtaining a valid test score from a student, however, requires more than just a good test. It also requires an engaged student who devotes enough effort to the test to demonstrate his true level of proficiency. Without sufficient effort, regardless of how good the test is, a student’s test score is likely to markedly underestimate what he really knows and can do. This means that such a score alone should not be considered a trustworthy indicator of the student’s proficiency level, and should be interpreted cautiously.

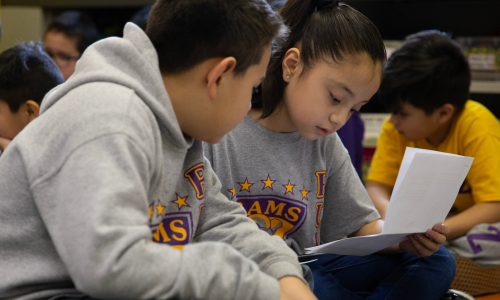

No assessment—no matter how carefully designed—is immune to this problem. Despite best efforts to ensure the highest quality of assessments, educators can’t control the behavior of the students who are tested. While it is true that most students are engaged when they are being tested, some are not. Why not? To understand this better, it is helpful to first turn the question around and consider why any student gives good effort to a test.

Students are highly likely to remain engaged and give good effort whenever there are meaningful personal consequences associated with their test performance. That is, they try because they want to attain something they value, such as a good grade, high school graduation, a scholarship, etc. Moreover, even in the absence of personal consequences, most students will still give good effort to a test. In these cases, internal psychological factors are operating, such as a desire to please their teachers, a fear of punishment for not giving good effort, competitiveness, or an intrinsic motivation to solve problems that are put before them.

Test-taking engagement is most likely to be a problem when students perceive an absence of personal consequences for test performance. This is called low-stakes testing (as seen from the student’s perspective). In these situations, students’ internal motivational factors may not be strong enough to maintain test-taking engagement. But even in high-stakes testing situations, students can become disengaged during testing due to such factors as fatigue, illness, personal problems, or a general disaffection with school. Thus, while disengagement is most likely during low-stakes testing, it can occur during high-stakes testing as well.

For the past dozen years or so, I have researched student test-taking engagement and have learned a lot about its dynamics. Along with my colleagues, I have pioneered methods for detecting low test-taking effort on computer-based tests. We have developed criteria for identifying test events for which effort was so low that the resulting test scores are invalid. Using these criteria, we have discovered a number of important relationships involving student engagement with our Measures of Academic Progress® (MAP®) assessment. These include findings that:

- Boys exhibit scores reflecting low effort on MAP about twice as often as do girls. For some reason, this finding surprises no one.

- Low test-taking effort is about twice as likely to occur on a reading test event as on a math test event. This is because reading test items tend to contain more words, which students view as requiring more effort to answer.

- The prevalence of low-effort scores increases with grade. In one study, we found that about 1% of the MAP scores reflect low effort at grade 2, with a gradual increase to about 15% in grade 9.

- The time of day that MAP is given is important. The percentage of low-effort scores gradually increases during the day, with students tested late in the day exhibiting low effort about twice as often as those tested at the beginning of the day.

Although these findings are based on MAP scores, there is no reason to believe that similar patterns wouldn’t appear with other educational assessments as well.
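To make the idea of flagging low-effort test events more concrete, here is a minimal sketch. It is not the criteria described above, which this article does not spell out. It assumes, purely for illustration, that a computer-based test records how long a student spends on each item, that a very fast response signals a guess rather than a real attempt, and that a test event is flagged when too few of its responses look effortful. The names `ItemResponse`, `effortful_share`, and `is_low_effort`, along with both threshold values, are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration only; not taken from the article.
RAPID_GUESS_SECONDS = 3.0   # responses faster than this are treated as rapid guesses
MIN_EFFORTFUL_SHARE = 0.90  # flag the test event if the effortful share falls below this


@dataclass
class ItemResponse:
    item_id: str
    seconds: float  # time the student spent on the item


def effortful_share(responses, threshold=RAPID_GUESS_SECONDS):
    """Return the fraction of responses that took at least `threshold` seconds."""
    if not responses:
        raise ValueError("a test event needs at least one response")
    effortful = sum(1 for r in responses if r.seconds >= threshold)
    return effortful / len(responses)


def is_low_effort(responses, min_share=MIN_EFFORTFUL_SHARE):
    """Flag a test event whose effortful share falls below `min_share`."""
    return effortful_share(responses) < min_share


# One made-up test event: two considered answers and two very fast ones.
event = [
    ItemResponse("q1", 41.0),
    ItemResponse("q2", 1.2),
    ItemResponse("q3", 2.0),
    ItemResponse("q4", 35.5),
]
print(effortful_share(event))  # 0.5
print(is_low_effort(event))    # True
```

In practice, a single fixed time threshold is a simplification: items with more text reasonably take longer to read, so any real criterion would need to account for differences between items.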

So, given this problem, what should test givers do? First, they should be mindful of the student’s perspective regarding the test. That is, even though the test may be extremely important to test givers (e.g., when used for school accountability or teacher evaluation), the test may be of limited importance to students. Moreover, they should be aware that how, when, and where a test is administered matters. Students should always be given encouragement from their teachers to give their best effort, and the importance of getting valid test scores should be clearly explained. The test should be administered in quiet settings that are free of distractions, and there should be no incentives for students to rush through the test (such as going to lunch or recess immediately afterward). Ideally, students should be tested earlier in the day (to the extent possible).

The general goal is to administer tests in a way that maximizes students’ engagement throughout their test events. Although we can’t eliminate disengaged test taking, we can try to minimize its impact.