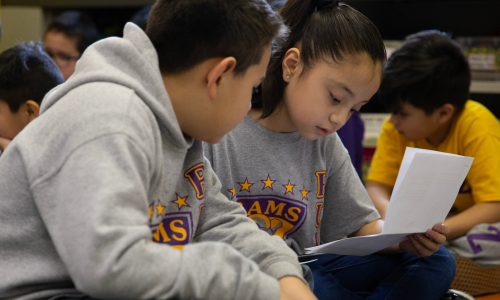

As fall is in full swing, and most schools and districts are either testing or starting to view results, we thought it was timely to share some findings from NWEA Research on average test duration. The MAP Growth assessments are untimed, so students have latitude to work at their own pace during administration. When a challenging question is presented, the student can consider the response, or, with some items, use a tool such as a calculator to work out the answer without the pressure of being on the clock.

Because the test is untimed, the proctor in the room has a responsibility to monitor students' progress and decide whether a test should be paused and resumed at another time.

My colleague, Dr. Steven Wise, has written about the work NWEA Research has done to unpack the relationship between student engagement and the validity of MAP Growth scores. Last fall, we introduced notifications in the MAP Growth assessment that help proctors identify students who may be "rapid guessing," that is, choosing an answer faster than they could have fully read and understood the item. Last week, I wrote about how our research on rapid guessing informed our policy of invalidating a MAP Growth test when a student rapid-guesses on 30% or more of the items.

But what if the issue is not student effort, but rather a lapse in good testing practices? To answer this, NWEA Research set out to examine the relationship between administration practices and test integrity.

By test integrity, I mean whether a test score is a legitimate estimate of a student's ability, or whether it was produced under conditions that could over- or under-represent that ability. Examples of such conditions include allowing students to take multiple hours to complete the assessment in a single sitting, frequent interruptions or pauses initiated by the proctor, and frequent retesting of students. Educators also need to keep their practices consistent across test administrations. For example, having students complete the fall test in a single sitting and then spreading the spring test over four or five sittings compromises the integrity of the resulting growth score, because the testing conditions were not consistent. Thankfully, these situations are not common, but they do happen.

One of the areas we focused on is test duration. An efficient measure of student learning shouldn't keep the student away from the classroom for several hours at a time. In general, NWEA expects that students will complete a MAP Growth test in about 45 to 75 minutes, with high-performing students taking longer in some cases. There is, of course, variability depending on testing season, student grade level, and the subject area of the assessment.

The NWEA Research team studied test durations, and changes in test duration between terms, for grades K-10, and documented the averages along with benchmarks for identifying unusual durations to help inform our partners. The results are now available to MAP Growth users both as a report and as a data visualization. While the average durations are not strict requirements, they do offer ranges and comparative data to consider.

At NWEA, our mission is "Partnering to help all kids learn." The goal of our work and our assessments is to help educators help students improve their learning, not just their scores. In other words, improved scores should follow from improvements in learning, not be an end in themselves. One of the great joys of working at NWEA is knowing that we are committed to that mission and to improving learning. When used properly by dedicated and smart educators, our assessments show what students know and the progress in learning that is happening.