The subject line on the email from my daughter’s third-grade teacher read, “Totally baffled.” The source of the confusion? Winter test scores.

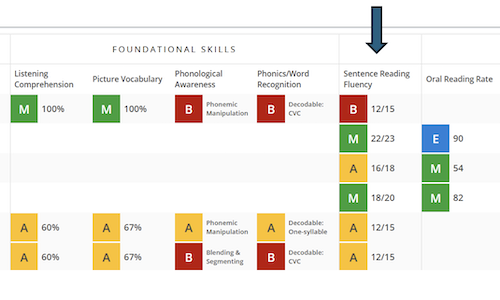

It was mid-January, and my daughter’s class had taken the winter MAP® Growth™ math assessment that day. Her teacher was aware that I worked for NWEA and that I had used MAP Growth data in my own classroom when I was teaching. She’d even come to me in the fall with some questions about understanding and utilizing her class summary data. This time, however, the question she had was about an individual student whose score had dropped between fall and winter testing: my daughter.

Digging deeper

I’ve addressed the question about what it means when scores go down with many parents, guardians, and educators. I’ll admit, however, that it felt a little strange to be explaining it as the parent, to the teacher, instead of the other way around.

My daughter’s score had gone down four RIT points, the email said. Four points is not much greater than the standard error of measurement, so I wasn’t all that alarmed. But I still wanted to know more about what happened.
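For readers who like to see the arithmetic, here is a minimal sketch of how a score change can be compared with measurement error. Everything numeric in it is an assumption for illustration: the fall score of 200 and the standard error of 3.0 RIT points on each test are hypothetical, and the function name is my own. Only the four-point drop comes from the email. It also assumes the errors on the two tests are independent, so the standard error of the difference is the square root of the sum of the squared errors.

```python
import math


def change_in_standard_errors(fall_score, winter_score, fall_sem, winter_sem):
    """Express a fall-to-winter score change in units of the standard
    error of the difference (assumes independent measurement errors)."""
    change = winter_score - fall_score
    se_difference = math.sqrt(fall_sem ** 2 + winter_sem ** 2)
    return change / se_difference


# Hypothetical values: only the four-point drop is from the story.
ratio = change_in_standard_errors(
    fall_score=200, winter_score=196, fall_sem=3.0, winter_sem=3.0
)
print(round(ratio, 2))  # -0.94
```

Under these assumed numbers, the drop is a little less than one standard error of the difference, which is why a change of this size on its own is hard to distinguish from ordinary measurement noise.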

I asked the same questions I ask whenever I see that a student’s winter test scores have gone down:

- Did the student take much less time on the winter test than in the fall?

- Did the student seem distracted or was there anything going on that day or during testing that may have made it hard for them to do a good job?

- How did the student feel about how they did?

- Is the student learning? That is, does the teacher see evidence in class that they are making progress on the skills they need?

1. Did the student take much less time on the winter test than in the fall?

Often, when winter test scores drop, the test duration time helps tell the story.

Maybe the student took 45 minutes to complete the test in the fall but rushed through the winter test and finished in 20 minutes. Or perhaps there’s evidence of rapid guessing, which can shorten how long it takes a student to complete an assessment. In either case, the drop in the score likely happened because the student was not trying their best.

In my daughter’s case, the MAP Growth reports showed that she had actually taken longer on the second test than she had on the first.
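The duration check described above can be sketched in a few lines. This is only an illustration: the function name and the 0.5 cutoff are assumptions of mine, not a rule from MAP Growth reports, and the 30- and 40-minute pair is a hypothetical stand-in for a student who took longer in winter.

```python
def looks_rushed(fall_minutes, winter_minutes, threshold=0.5):
    """Flag a winter test that took less than `threshold` times as long
    as the fall test. The 0.5 default is an arbitrary illustrative cutoff."""
    return winter_minutes / fall_minutes < threshold


# The 45-minute fall test and 20-minute winter test from the example above.
print(looks_rushed(45, 20))  # True

# A hypothetical student who took longer in winter than in fall.
print(looks_rushed(30, 40))  # False
```

A flag like this is a prompt for a conversation, not a verdict. A shorter test can have other explanations, and a longer one does not guarantee a higher score.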

2. Did the student seem distracted or was there anything going on that day or during testing that may have made it hard for them to do a good job?

For my sweet, creative, social, and often scatterbrained child, I knew this was a distinct possibility. I would not have been surprised to find out that she’d been whispering with a friend or drawing pictures of animals on scratch paper during the assessment. This time, however, her teacher said the opposite was true: in the fall, she’d been off task a lot, but for the winter test, the proctor reported that my daughter had been “not very wiggly” and had stayed on task.

I was certainly glad to hear it, even if it didn’t help explain the test score.

3. How did the student feel about how they did?

I taught upper grade students (grades 5–8), and when I talked with my own students about their test scores, I’d often start by asking how they felt about how they did—no matter what their scores were. Hearing their perspective was often very informative.

I remember one student who seemed unhappy when we sat down to talk about his score. He said that the test had seemed really hard and that he’d struggled. He didn’t think he had done well at all. Looking at his scores, however, it turned out that he had grown several RIT points beyond his growth projection. The test felt harder because it was harder! As the student began to get more difficult questions correct, MAP Growth adapted the difficulty level to better match his new skills and he had gotten into some higher-level questions. He’d actually done exceptionally well! He left that conference with me with a huge smile on his face.

In my daughter’s situation, she’d been smiling, too. Her teacher said my daughter was very proud of how she had done on the test. And her teacher was proud of her effort and focus, regardless of how the score turned out. So, finally, I asked the most important question:

4. Is the student learning? That is, does the teacher see evidence in class that they are making progress on the skills they need?

The teacher assured me that my daughter was learning, that she had shown a lot of progress in class since the fall. The teacher mentioned some specific concepts they’d been working on and some class assignments that had been recently completed. We decided that we would keep an eye on her but otherwise not worry too much about the drop. We were eager to see if her score went back up in the spring.

In closing

Why, exactly, did my daughter’s winter test scores go down? It’s hard to know for sure, but investigating possible causes can help teachers plan for differentiating instruction and serving the needs of all the kids in their classroom.

As a classroom teacher, I relied heavily on MAP Growth data as a starting point when planning instruction for my students. To learn more about getting the most from the assessment, visit our archive of posts about MAP Growth.