Welcome to the third post in a series of entries about how MAP assessments are aligned to content standards. Previous posts in the series discussed how we ensure test items align to standards and how we ensure tests align to standards. Today’s post, by senior ELA content specialist Mathina Calliope, describes how NWEA’s item development process ensures that our items meet content-integrity, sound-construction, and cognitive-rigor criteria.

Creating a high-quality test item is difficult. Many content specialists think of it as a fun but incredibly challenging puzzle. It’s fun because there’s a creative component to it; it’s challenging because so many pieces need to fit together just so.

Good Items

At NWEA, our business processes are designed to carefully fit together each and every one of those pieces for each and every item we create. Our non-negotiable goals for items are that each one:

- truly measures what it claims to measure (is aligned to a standard);

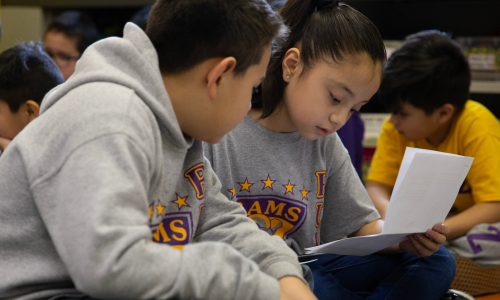

- makes sense to students;

- is equally accessible to all students—regardless of socioeconomic status, race or ethnicity, special needs, English language development levels, etc.;

- mirrors as closely as possible the classroom experience; and of course

- meets all industry standards:

+ has only one right answer;

+ uses clear, concise wording;

+ contains grammatically parallel options;

+ lacks outlier options; and

+ meets many more subject- and standard-specific requirements.

Development Process

We start with standards. Content specialists carefully analyze state standards to determine whether or to what extent they are assessable in a standardized-testing format. (See this entry for a more thorough discussion of how content specialists “unpack” standards.)

Next is acquisition. We use item writers with proven experience, including outside vendors, individual contractors, and internal staff, who create raw items from our detailed item specifications and submit them to us. Our item acquisition team then conducts a preliminary review to determine whether each item meets the specification and passes a plagiarism and permissions review.

Then the item moves to the content design team, where multiple content specialists separately and thoroughly review, repair, and improve it. Content specialists check each item for adherence to the principles of universal design, freedom from bias/sensitivity violations, alignment to standards, and good item construction, and they correct any problems they find. Content specialists’ backgrounds vary, but at NWEA, we are former classroom teachers and pedagogical experts, and we have all undergone extensive training in the principles of good item design.

After content specialist review, an item gets one last quality review, including copyediting and making sure the item displays properly on our testing platform.

Finally, before an item goes “live,” it is field tested and must meet minimum standards of statistical calibration. If anything surprising happens in how an item performs in field testing (such as one subgroup of students performing more poorly on an item than other subgroups), the item is scrutinized and then either revised and field tested again or discarded altogether.
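For readers curious what a subgroup check might look like in practice, here is a minimal sketch. It is not NWEA's actual statistical procedure: the function name, the data layout, and the 0.15 threshold are all hypothetical, and operational analyses typically compare students of similar overall ability rather than raw percent-correct rates. The sketch simply flags items where the gap between the highest and lowest subgroup success rates is unusually large, so that a person can take a closer look.

```python
from collections import defaultdict


def flag_subgroup_gaps(responses, max_gap=0.15):
    """Flag items whose subgroup success rates differ by more than max_gap.

    responses: iterable of (item_id, subgroup, is_correct) tuples.
    max_gap: hypothetical review threshold, not an industry standard.
    Returns {item_id: {subgroup: proportion_correct}} for flagged items only.
    """
    # counts[item][subgroup] = [number correct, number attempted]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for item_id, subgroup, is_correct in responses:
        tally = counts[item_id][subgroup]
        tally[0] += int(is_correct)
        tally[1] += 1

    flagged = {}
    for item_id, groups in counts.items():
        # Proportion of correct responses within each subgroup
        rates = {g: correct / total for g, (correct, total) in groups.items()}
        # A large spread between subgroups sends the item back for scrutiny
        if max(rates.values()) - min(rates.values()) > max_gap:
            flagged[item_id] = rates
    return flagged
```

A flag from a check like this would not settle anything on its own. It only marks the item for the human scrutiny described above.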

Getting the Question Right

It takes a lot of time and effort for an item to “graduate” to Active status and count toward a student’s final score. We give each item that time and attention because we know teachers, students, and parents are relying on the validity and usefulness of the information each item provides. We make sure we get the questions right.