Measurement & scaling

Identifying disengaged survey responses: New evidence using response time metadata

In this study, we condition results from a variety of detection methods used to identify disengaged survey responses on response times. We then show how this conditional approach may be useful in identifying where to set response time thresholds for survey items, as well as in avoiding misclassification when using other detection methods.

By: James Soland, Steven Wise, Lingyun Gao

Robust IRT scaling: Considerations in constructing item bank from tests across years

This study investigates the impact of three different IRT scaling and equating methods in building an item bank of tests from 23 years of a national licensure exam. The study focuses on several key psychometric issues, including scale drift and equating errors.

By: Jungnam Kim, Dong-In Kim, Furong Gao

Topics: Measurement & scaling, Computer adaptive testing, Item response theory

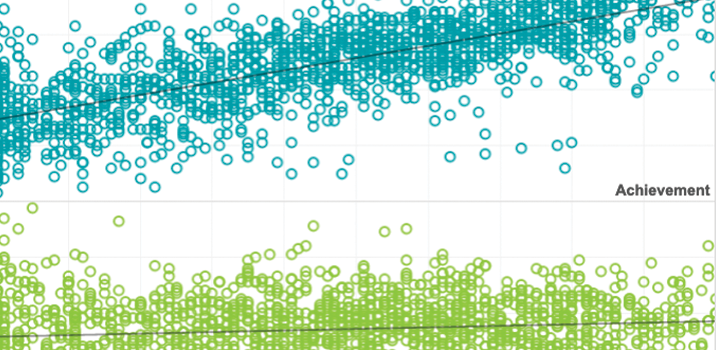

This article addresses the issue by estimating teacher value added, then applying extremely mild nonlinear transformations to the original scale and re-estimating the value added. Although by definition at most one of these scales can be equal-interval, all are treated as if interval-scaled when estimating value added.

By: James Soland

Topics: Measurement & scaling, Student growth & accountability policies

Rapid‐guessing behavior: Its identification, interpretation, and implications

The rise of computer‐based testing has brought with it the capability to measure more aspects of a test event than simply the answers selected or constructed by the test taker. One behavior that has drawn much research interest is the time test takers spend responding to individual multiple‐choice items.

By: Steven Wise

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

A general approach to measuring test-taking effort on computer-based tests

The current study outlines a general process for measuring item-level effort that can be applied to an expanded set of item types and test-taking behaviors (such as omitted or constructed responses). This process, which is illustrated with data from a large-scale assessment program, should improve our ability to detect non-effortful test taking and perform individual score validation.

By: Steven Wise, Lingyun Gao

Topics: Measurement & scaling, Innovations in reporting & assessment, Student growth & accountability policies

A large-scale, long-term study of scale drift: The micro view and the macro view

This study examined the measurement stability of a set of Rasch measurement scales that have been in place for almost 40 years.

Modeling student test-taking motivation in the context of an adaptive achievement test

This study examined the utility of response time‐based analyses in understanding the behavior of unmotivated test takers. Using data from an adaptive achievement test, patterns of observed rapid‐guessing behavior and item response accuracy were compared to the behavior expected under several types of models that have been proposed to represent unmotivated test‐taking behavior.

Topics: Innovations in reporting & assessment, Measurement & scaling, School & test engagement