School & test engagement

Educators need accurate assessment data to help students learn. But when students rapid-guess or otherwise disengage on tests, the validity of their scores can be affected. Our research examines the causes of test disengagement, how it relates to students’ overall academic engagement, and its impacts on individual test scores. We look at its effects on aggregated metrics used for school and teacher evaluations, achievement gap studies, and more. This research also explores better ways to measure and improve engagement and to help ensure that test scores more accurately reflect what students know and can do.

This study compared the test-taking disengagement of students taking a remotely administered adaptive interim assessment in spring 2020 with their disengagement on the assessment administered in school during fall 2019.

By: Steven Wise, Megan Kuhfeld, John Cronin

Topics: Equity, Innovations in reporting & assessment, School & test engagement

Six insights regarding test-taking disengagement

There has been increasing concern about the presence of disengaged test taking in international assessment programs and its implications for the validity of inferences made regarding a country’s level of educational attainment. In this paper, the author discusses six important insights yielded by 20 years of research on this topic and their implications for assessment programs.

By: Steven Wise

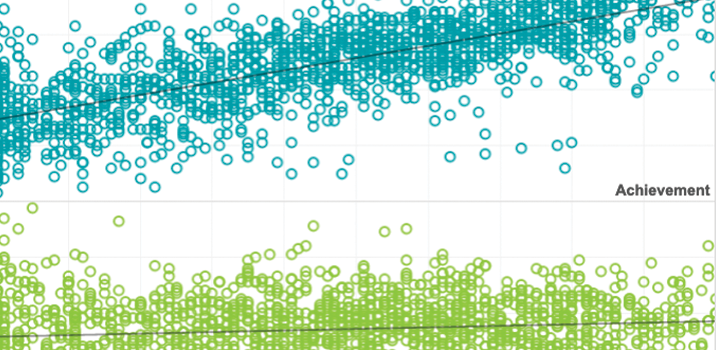

The impact of disengaged test taking on a state’s accountability test results

This study investigated test-taking engagement on a large-scale state summative assessment. Overall, results of this study indicate that disengagement has a material impact on individual state summative test scores, though its impact on score aggregations may be relatively minor.

By: Steven Wise, Jonghwan (Jay) Lee, Sukkeun Im

Topics: Equity, Measurement & scaling, School & test engagement

Variation in respondent speed and its implications: Evidence from an adaptive testing scenario

The more frequent collection of response time data is leading to an increased need for an understanding of how such data can be included in measurement models. Models for response time have been advanced, but relatively limited large-scale empirical investigations have been conducted. We take advantage of a large data set from the adaptive NWEA MAP Growth Reading Assessment to shed light on emergent features of response time behavior.

By: Benjamin Domingue, Klint Kanopka, Ben Staug, James Soland, Megan Kuhfeld, Steven Wise, Chris Piech

Topics: School & test engagement, Innovations in reporting & assessment

A method for identifying partial test-taking engagement

This paper describes a method for identifying partial engagement and provides validation evidence to support its use and interpretation. When test events indicate the presence of partial engagement, effort-moderated scores should be interpreted cautiously.

By: Steven Wise, Megan Kuhfeld

Topics: Measurement & scaling, Innovations in reporting & assessment, School & test engagement

This study uses reading test scores from over 728,923 3rd–8th-grade students in 2,056 schools across the US to compare threshold-setting methods for detecting noneffortful item responses, and so helps provide guidance on the tradeoffs involved in using a given method to identify noneffortful responses.

By: James Soland, Megan Kuhfeld, Joseph Rios

Topics: School & test engagement

Comparability analysis of remote and in-person MAP Growth testing in fall 2020

How have COVID-19 school closures impacted student academic growth and achievement? Research using fall 2020 MAP Growth assessment data for 4.4 million students provides new insights, key findings, and actionable recommendations.

By: Megan Kuhfeld, Karyn Lewis, Patrick Meyer, Beth Tarasawa

Topics: COVID-19 & schools, Measurement & scaling, School & test engagement