Journal article

Using retest data to evaluate and improve effort-moderated scoring

2020

Journal of Educational Measurement, https://doi.org/10.1111/jedm.12275

Abstract

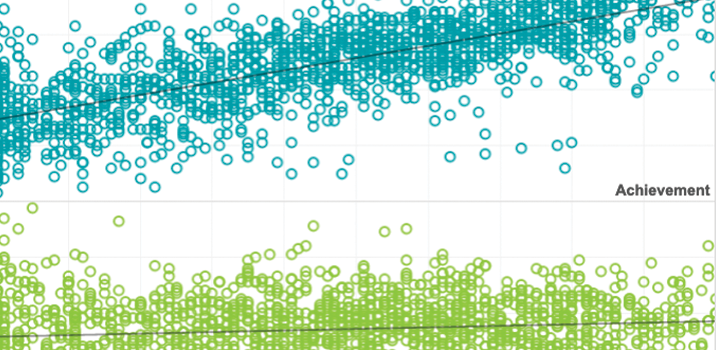

There has been a growing research interest in the identification and management of disengaged test taking, which poses a validity threat that is particularly prevalent with low‐stakes tests. This study investigated effort‐moderated (E‐M) scoring, in which item responses classified as rapid guesses are identified and excluded from scoring. Using achievement test data composed of test takers who were quickly retested and showed differential degrees of disengagement, three basic findings emerged. First, standard E‐M scoring accounted for roughly one‐third of the score distortion due to differential disengagement. Second, a modified E‐M scoring method that used more liberal time thresholds performed better—accounting for two‐thirds or more of the distortion. Finally, the inability of E‐M scoring to account for all of the score distortion suggests the additional presence of nonrapid item responses that reflect less‐than‐full engagement by some test takers.
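The E-M idea summarized above can be sketched in a few lines. This is a minimal illustration, not the study's procedure. The abstract does not name the measurement model, the ability estimator, or the rule used to set time thresholds, so the following are all assumptions:

- a Rasch model with known item difficulties;
- maximum-likelihood ability estimation, solved by bisection;
- a hypothetical threshold of a fixed fraction of each item's mean response time, capped at a fixed number of seconds.

The function names (`rapid_guess_flags`, `rasch_mle`, `effort_moderated_theta`) are illustrative only. A "more liberal" threshold corresponds to a larger `fraction` or `cap`, which flags more responses as rapid guesses.

```python
import math


def rapid_guess_flags(times, mean_times, fraction=0.10, cap=10.0):
    # Hypothetical threshold rule (assumption): a response is a rapid guess if
    # its time is below `fraction` of the item's mean time, capped at `cap` seconds.
    return [t < min(fraction * m, cap) for t, m in zip(times, mean_times)]


def rasch_prob(theta, b):
    # Rasch model probability of a correct response to an item of difficulty b.
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def rasch_mle(responses, difficulties, lo=-6.0, hi=6.0, iters=60):
    # Solve sum(x_i - P_i(theta)) = 0 by bisection. The left side decreases in
    # theta, so the root is unique; all-correct or all-incorrect patterns have
    # no finite MLE and end up at a bound of [lo, hi].
    def score(theta):
        return sum(x - rasch_prob(theta, b) for x, b in zip(responses, difficulties))

    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if score(mid) > 0:
            lo = mid  # observed score exceeds expected: theta is too low
        else:
            hi = mid
    return (lo + hi) / 2.0


def effort_moderated_theta(responses, difficulties, times, mean_times, **threshold_args):
    # E-M scoring: drop responses flagged as rapid guesses, then estimate
    # ability from the remaining responses only.
    flags = rapid_guess_flags(times, mean_times, **threshold_args)
    kept = [(x, b) for x, b, rg in zip(responses, difficulties, flags) if not rg]
    if not kept:
        return None  # every response was a rapid guess: no E-M score
    xs, bs = zip(*kept)
    return rasch_mle(list(xs), list(bs))


responses = [1, 0, 1, 0]
difficulties = [0.0, 0.0, 0.0, 0.0]
times = [20.0, 1.0, 25.0, 30.0]       # the second response took 1 second
mean_times = [30.0, 30.0, 30.0, 30.0]

standard = rasch_mle(responses, difficulties)
em = effort_moderated_theta(responses, difficulties, times, mean_times)
print(standard, em)
```

With all four responses scored, two of four correct on equally difficult items gives an estimate near 0. Excluding the one-second incorrect response leaves two of three correct, so the E-M estimate rises to ln 2 (about 0.69). This is the kind of downward distortion from rapid guessing that E-M scoring is meant to remove.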

This article was published outside of NWEA. The full text can be found at the link above.